
What worked in the 4G era will not cut it now. Learn how to validate real user journeys across 5G and IoT, and use AI to speed feedback without cutting corners.

Mobile technologies underpin today's global digital economy, contributing $6.5 trillion (5.8% of global GDP) in 2024, a figure projected to rise to $11 trillion by 2030. This rapid growth is fueled by the expansion of 5G networks, the rise of IoT ecosystems, and the integration of AI features.

Together, these technologies are changing not just how mobile apps are built, but how they need to be tested. Users now expect instant, seamless experiences even as apps become more complex. Traditional testing may struggle to keep up, making it essential for QA teams to adapt their processes, tools, and skills to meet these new demands.

So what actually shifts day to day? How does 5G alter performance expectations? How do AI features and IoT multiply testing complexity? And how does AI help QA teams keep up?

We are here to help you find out:

Transforming the performance testing landscape with 5G

5G is not just faster; it is resetting expectations. Streaming starts instantly, AR feels smooth, and everyday apps work without hesitation. The challenge is that when everything feels immediate, users stop noticing speed and start noticing any interruption.

This is why testing needs to advance as well. It must ensure stability even when networks fluctuate, maintain strong security as more users connect, and confirm that performance holds across the wide range of devices in use. The reality is that fast is no longer a competitive advantage; it is the baseline, and testing goals need to reflect that.

IT services providers such as a1qa are already driving this change by modernizing testing strategies for next-generation mobile environments.

What to focus on

- Connectivity and mobility: verify behaviour as speed and signal change, and as the device moves between coverage areas. Use 5G-ready test environments to identify and resolve distribution and handover issues promptly.

- Security and privacy: with more connected devices and more data in play, conduct security testing before release.

- Device and network compatibility: test on a broad set of 5G-capable and non-5G devices to mirror real end-user conditions.

- Performance: check throughput, responsiveness, and stability at higher loads typical of 5G use.

Testing setups built for the 4G world struggle to keep up with what 5G apps experience in reality. The speed, low latency, and shifting network conditions of 5G create scenarios that older environments simply cannot replicate. This leaves a gap between lab results and real-world performance, a gap that needs to be closed to ensure apps work as expected once they reach users.

For example, engineers might simulate a commuter trip in which the phone moves between strong 5G, crowded 5G, and 4G while the servers are hit with surge-level traffic from thousands of users. Such a test can surface issues that appear only under specific conditions, such as when the device switches from 5G to 4G or when the network is congested.
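A commuter trip like this can be written down as a schedule of network profiles that a test harness steps through. The sketch below is only an illustration in Python: the `NetworkProfile` class, the profile names, and every bandwidth, latency, and loss figure are assumptions made up for the example, not measurements or part of any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkProfile:
    """One network condition the device under test is held in (hypothetical)."""
    name: str
    downlink_mbps: float
    latency_ms: float
    loss_pct: float


# Illustrative numbers only, not measurements of any real network.
STRONG_5G = NetworkProfile("strong-5g", 400.0, 15.0, 0.0)
CROWDED_5G = NetworkProfile("crowded-5g", 40.0, 60.0, 1.0)
LTE_4G = NetworkProfile("4g", 20.0, 80.0, 2.0)

# (start_second, profile) pairs, sorted by start time.
COMMUTE = [(0, STRONG_5G), (120, CROWDED_5G), (300, LTE_4G), (420, STRONG_5G)]


def profile_at(schedule, second):
    """Return the profile active at the given second of the trip."""
    active = schedule[0][1]
    for start, profile in schedule:
        if second >= start:
            active = profile
    return active


def handover_times(schedule):
    """Seconds at which the profile changes; assertions belong around these."""
    return [
        start
        for (_, previous), (start, current) in zip(schedule, schedule[1:])
        if current != previous
    ]
```

A harness could apply `profile_at(COMMUTE, t)` to whatever network shaper it uses and schedule its checks around `handover_times(COMMUTE)`, since that is where handover defects are expected to show up.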

IoT and scaling testing across device ecosystems

Connected products now play a role both in high-stakes environments such as healthcare and smart cities and in everyday spaces such as retail. In critical areas, there is no room for a testing gap. In daily use, reliability shapes customer trust and brand reputation. If these systems fail when people rely on them most, what is the cost to safety, trust, and the brand? Security, performance under heavy use, and smooth operation across all devices and platforms have to be top priorities.

What to focus on

- Security first: run penetration testing and vulnerability assessments across the network, cloud, and apps to protect sensitive data and the system as a whole.

- Performance and scale: execute load and stress checks and verify scalability as device counts grow.

- Compatibility and interoperability: plan for varied phones, OS versions, browsers, and connectivity. Conduct compatibility testing to ensure that all elements work together seamlessly.

- Risk-based testing strategy: failures can have serious consequences in medical or civic contexts, so align test depth with impact.
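One common way to align test depth with impact is a likelihood-times-impact score. The sketch below assumes a 1 to 5 scale for both factors and three depth tiers; the thresholds, tier names, and feature names are hypothetical and would need to be agreed with the people who own the risk.

```python
def risk_score(likelihood, impact):
    """Multiply likelihood by impact, each an integer from 1 (low) to 5 (high)."""
    for value in (likelihood, impact):
        if value not in range(1, 6):
            raise ValueError("likelihood and impact must be integers from 1 to 5")
    return likelihood * impact


def depth_for(score):
    """Map a risk score to a test depth tier (thresholds are assumptions)."""
    if score >= 15:
        return "exhaustive"
    if score >= 6:
        return "standard"
    return "smoke"


def plan(features):
    """Rank features by risk. `features` maps name -> (likelihood, impact)."""
    scored = [(name, risk_score(l, i)) for name, (l, i) in features.items()]
    # Highest risk first; ties broken by name so the order is stable.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return [(name, score, depth_for(score)) for name, score in scored]
```

With this scoring, a patient-alert path rated (3, 5) lands in the exhaustive tier, while a lobby display rated (2, 1) gets only smoke checks.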

The challenge extends well beyond testing individual devices. In the IoT ecosystem, a wide range of devices, platforms, and protocols must work together seamlessly.

It is not enough for each component to function in isolation; the entire system needs to communicate, share data, and respond in sync. This interconnectedness adds a significant layer of complexity to testing.

Let’s imagine a smart building that’s about to go live. Engineers might simulate an entire smart office tower with tens of thousands of lights, thermostats, and access points, then trigger a lunchtime peak.

The test sends a firmware update to all devices, floods the system with occupancy changes, and drops connectivity on random floors. It can reveal problems that appear only at scale, like delayed badge authentication, misreported headcount, or runaway retry loops, so they are fixed before the building goes live.
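Runaway retry loops are one of the easier at-scale problems to check for automatically, provided the simulation records an event log. The sketch below assumes a log of `(timestamp_s, device_id, kind)` tuples and a fixed-window retry budget; the event shape, the `"retry"` label, and the budget values are all hypothetical.

```python
from collections import Counter


def runaway_devices(events, max_retries_per_window, window_s):
    """Return sorted IDs of devices that exceed the retry budget in any window.

    `events` is an iterable of (timestamp_s, device_id, kind) tuples.
    Windows are fixed buckets of `window_s` seconds starting at time 0.
    """
    counts = Counter()
    for timestamp_s, device_id, kind in events:
        if kind == "retry":
            counts[(device_id, int(timestamp_s // window_s))] += 1
    return sorted(
        {device for (device, _), n in counts.items() if n > max_retries_per_window}
    )
```

A simulation run could then fail if `runaway_devices(...)` returns anything after connectivity is dropped on random floors, rather than relying on someone spotting the storm in a dashboard.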

Making AI features dependable at scale

AI now powers search, support, and personalisation in mobile apps. It succeeds when responses are fast, accurate, and safe. It fails when answers vary without reason, drift after small changes, or include hallucinations. Because AI is probabilistic, the same input can produce different valid outputs.

Small edits to prompts or data can shift behaviour across the system, and inaccurate answers erode user trust in your software. Testing must therefore measure quality, track versions, and prove stability over time. Collaborating with AI development companies can help ensure that best practices in AI reliability and testing frameworks are applied consistently.

What to focus on

- Groundedness and attribution: require answers to cite approved sources and measure groundedness, relevance, fluency, and hallucination rate. Block release when scores fall.

- Prompt and model control: version every prompt and model and run regression suites with a golden dataset after any change.

- Task-level tests first: define the smallest units of behaviour and test bottom up before end-to-end.

- Context and retrieval: validate retrieval-augmented generation (RAG) end to end, not just the retrieval step. Check that injected context is actually used by the model.

- Edge cases and drift: target rare scenarios and monitor in production to catch performance changes over time.

Traditional exact-match checks struggle with probabilistic outputs. Replace brittle asserts with reference answers, scorecards, and targeted human spot reviews, and log prompts, versions, and scores so defects are explainable and repeatable.

An example could be a retailer testing an AI assistant. Replay a golden dataset of customer questions across three prompt variants and two model versions, and capture groundedness and fluency scores. Inject poor or missing retrieval results to confirm the model falls back to scripted FAQs without disrupting the checkout flow. This approach shows stability despite valid output variation and proves that context is being effectively utilized.
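A golden-dataset replay of this kind can be sketched in a few lines. The example below is an assumption-heavy illustration: it uses token-overlap F1 against a reference answer as a crude stand-in for the groundedness and fluency scores mentioned above, and the `evaluate` function, its parameters, the 0.6 threshold, and the version labels are all hypothetical. A real suite would plug in whatever scoring the team trusts.

```python
import re
from collections import Counter


def tokens(text):
    """Lowercase alphanumeric tokens; punctuation is ignored."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_f1(answer, reference):
    """Token-overlap F1 between an answer and a reference, from 0.0 to 1.0."""
    a, r = tokens(answer), tokens(reference)
    common = sum((Counter(a) & Counter(r)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(a), common / len(r)
    return 2 * precision * recall / (precision + recall)


def evaluate(golden, generate, prompt_version, model_version, threshold=0.6):
    """Replay golden cases through `generate` and score each answer.

    `golden` is a list of {"question": ..., "reference": ...} dicts and
    `generate` is any callable mapping a question to an answer string.
    Versions are logged with every row so a failure can be traced back.
    """
    if not golden:
        raise ValueError("golden dataset is empty")
    rows = []
    for case in golden:
        answer = generate(case["question"])
        rows.append({
            "question": case["question"],
            "answer": answer,
            "score": overlap_f1(answer, case["reference"]),
            "prompt_version": prompt_version,
            "model_version": model_version,
        })
    mean_score = sum(row["score"] for row in rows) / len(rows)
    return {"mean_score": mean_score, "release_ok": mean_score >= threshold, "rows": rows}
```

Running `evaluate` once per prompt variant and model version gives comparable scorecards, and `release_ok` can serve as the gate that blocks a release when scores fall. Because the score tolerates rewording, two differently phrased but equivalent answers can both pass, which an exact-match assert would not allow.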

The future is now with AI-enhanced testing

Not long ago, AI felt experimental. Today, it is a practical way to speed up testing and widen coverage. It helps teams set priorities, generate and maintain test cases, identify visual issues, dynamically adjust performance tests, self-heal regression suites, and tailor reporting for stakeholders.

The result is faster cycles, fewer manual steps, and broader risk coverage. So, where does AI fit into a testing environment today?

These capabilities are now being integrated into broader IT services strategies to deliver continuous testing and smarter automation.

High-value uses

- Prioritisation and case generation: focus on the riskiest areas first.

- Visual and performance testing: catch subtle UI defects and simulate real-world loads.

- Self-healing automation: keep suites stable as UIs change.

- Reporting automation: surface KPIs for managers and provide technical details for engineers.

AI in testing offers a strategic advantage: faster development cycles, broader risk coverage, and less manual effort.

When worlds collide

The long-term challenge isn't mastering these technologies individually, but understanding how their convergence creates entirely new application categories that require integrated testing approaches.

Consider remote patient monitoring, where clinical-grade wearables stream ECG, SpO₂, and temperature data over 5G networks to hospital clouds, triggering AI-assisted alerts for doctors. These applications rely on seamless integration, where IoT medical devices must transmit data reliably over ultra-low-latency 5G connections to maintain real-time clinical accuracy. Or think about connected cars, which share information with other cars and cloud servers; the ability to operate under changing network conditions is crucial here.

So what does testing need to cover when these technologies work as one system?

Integration testing requirements

- Unified system validation: testing must treat IoT devices, 5G networks, edge computing, and cloud infrastructure as integrated ecosystems rather than separate components, ensuring data flows reliably across all system layers.

- Real-world environment simulation: validation requires actual 5G networks or controlled operator laboratories to confirm how IoT devices perform under varying network conditions.

- Integrated security frameworks: organizations should develop security policies addressing unique vulnerabilities created when IoT devices transmit sensitive data over 5G networks, following GSMA IoT Security Guidelines and NIST 5G guidance.

- End-to-end performance validation: applications must maintain consistent experiences as IoT devices switch between 5G and legacy networks while processing high-volume sensor data, requiring testing frameworks that simulate these complex, interdependent scenarios.
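For the end-to-end case, one concrete check is whether a sensor stream stays continuous while the device switches networks. The sketch below assumes each sample carries a sequence number and an arrival timestamp in milliseconds; the function names and the tolerance value are hypothetical and would depend on the clinical or product requirement.

```python
def find_gaps(timestamps_ms, max_gap_ms):
    """Return (previous, next) timestamp pairs that are too far apart."""
    ordered = sorted(timestamps_ms)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap_ms]


def missing_sequences(sequence_numbers):
    """Return sequence numbers absent between the lowest and highest seen."""
    seen = set(sequence_numbers)
    if not seen:
        return []
    return [n for n in range(min(seen), max(seen) + 1) if n not in seen]
```

Run against samples captured during a forced 5G-to-4G handover, a non-empty result from either function points to data that was delayed or lost in transit, which is the kind of defect component-level tests tend to miss.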

Traditional approaches that evaluate each component separately overlook the interactions between components that determine system reliability. When IoT medical devices fail to communicate properly over 5G networks, or when network handovers disrupt vital-sign data transmission, the entire clinical workflow can falter, resulting in potentially serious operational and patient-safety consequences.

What this means in the real world

When mobile testing is treated as a business advantage, products launch faster, customers complain less, and the apps people download actually work the way they should.

Shaped by the mix of 5G, IoT, and AI, mobile testing is moving into a new stage. The old ways of testing are no longer enough. Environments need to be upgraded to handle higher speeds, more connected devices, and complex system interactions. Forward-looking organizations are expanding their product engineering services to include 5G, IoT, and AI testing integration at scale.

AI can help by making testing smarter and more efficient, but only when it is paired with skilled teams who know how to apply it effectively. Success depends on aligning development, operations, and business goals so quality is built in at every stage.

Companies that adapt will deliver stronger, faster, and more reliable products, while those that do not risk falling behind.

Recent Blogs

The Role of Artificial Intelligence in Modern Law Firm Growth Strategies

-

03 Mar 2026

-

6 Min

-

104

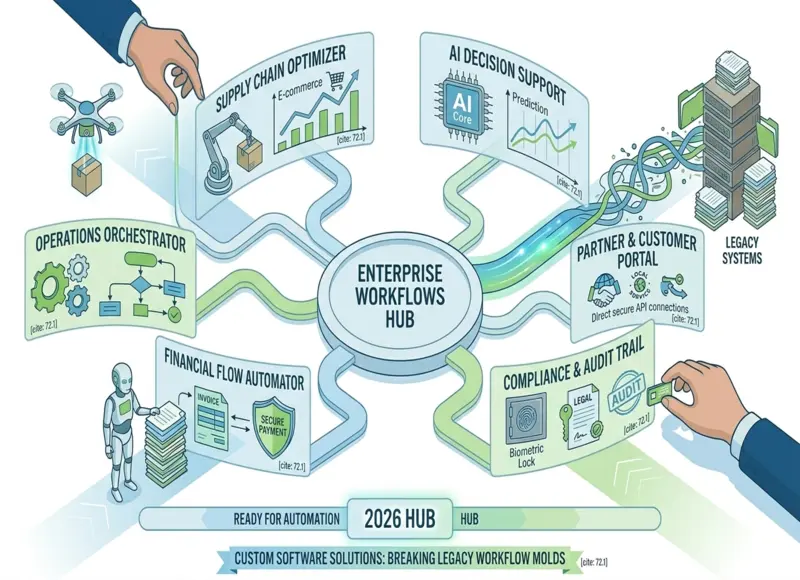

How Custom Software Companies Help Enterprises Automate Complex Workflows

-

03 Mar 2026

-

5 Min

-

127