Not all automation savings are real. Learn how to track ROI for Writing Automation as a Service tools using practical metrics across efficiency, quality, costs, and outcomes to make smarter content investment decisions.

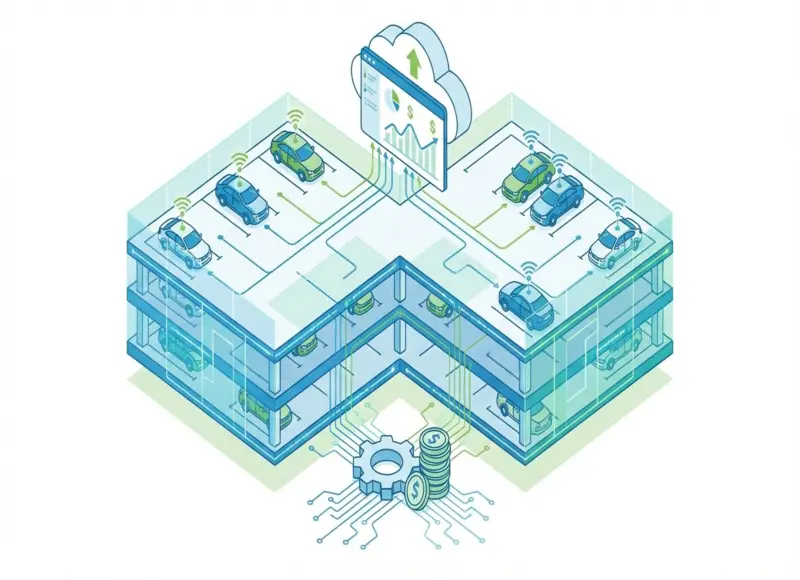

Writing Automation as a Service tools can feel like a shortcut. You drop in a brief, and you get a draft back almost immediately. At first, that speed alone can feel like ROI. But once you start paying monthly and building workflows around the tool, the real question changes. Is it saving time without creating new problems? Is it lowering costs without lowering quality? And is it actually helping content perform?

That is why ROI metrics for Writing Automation as a Service tools need to cover more than output volume. “We generated 200 drafts” is not a business result. A better ROI story looks at four areas at once: how fast you publish, how much you spend, what quality looks like, and what outcomes the content produces. If any one of these areas slips, the ROI you think you have can disappear quietly.

What does ROI mean when writing is automated?

ROI sounds simple. You invest resources, and you get value back. In writing automation, the complication is that the value shows up in multiple places. Drafting can become faster, but editing can become heavier. The team may publish more, but not every page will rank. You might save on outsourcing, but spend more on review, compliance, or fact-checking. So the safest way to measure ROI is to treat it as a balanced system rather than a single number.

A helpful way to think about it is like upgrading a kitchen. A better oven is not only about cooking faster. It also affects consistency, waste, and how much time people spend fixing mistakes. Writing automation works the same way. Speed matters, but so does what happens after the draft is generated.

Performance metrics that show whether automation improved results

Eventually, ROI has to connect to outcomes. Otherwise, you have only proved that you can produce content faster, not that it helps the business. One practical way to make that connection is to pick one high-intent request and follow it through the funnel. In student-focused products, spreadsheet help often works well because users expect clear steps and a correct result.

When someone says, “Do my Excel homework online,” the output should include the exact formulas, a short explanation of why they work, and a clear next action on the page. Track time from request to publishable content, the number of editor fixes, and the conversion rate from that flow into a trial or paid plan. If all three improve at the same time, you can point to ROI that holds up in a budget conversation.

For SEO-focused teams, impressions, clicks, and rankings are the most direct signals. But these metrics need to be read over a long enough period. Some content takes weeks to rank, and some updates have a delayed impact. A practical approach is to track performance in cohorts: compare content published before automation with content published after automation, over the same time windows.
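To make the cohort idea concrete, here is a minimal sketch in Python. It assumes you can export clicks per page for a fixed window after publication; the field names (`cohort`, `clicks_first_90_days`), the 90-day window, and the numbers are all hypothetical.

```python
from statistics import mean

def cohort_average(pages, cohort, metric="clicks_first_90_days"):
    """Average a metric over all pages in one cohort."""
    values = [page[metric] for page in pages if page["cohort"] == cohort]
    if not values:
        raise ValueError(f"no pages in cohort {cohort!r}")
    return mean(values)

# Hypothetical export: every page is measured over the same 90-day window.
pages = [
    {"cohort": "before", "clicks_first_90_days": 120},
    {"cohort": "before", "clicks_first_90_days": 80},
    {"cohort": "after", "clicks_first_90_days": 150},
    {"cohort": "after", "clicks_first_90_days": 110},
]

before_avg = cohort_average(pages, "before")   # 100
after_avg = cohort_average(pages, "after")     # 130
lift = (after_avg - before_avg) / before_avg   # 0.3, i.e. a 30% lift
print(f"Lift after automation: {lift:.0%}")
```

Using the same window for both cohorts is the important part. Comparing a year-old page with a month-old page would mostly measure age, not automation.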

Another strong ROI indicator is indexation speed. If your publishing cadence improves and Google indexes your pages faster, you are shortening time to value. That matters in competitive topics, where being late means fighting uphill for months.

Conversions are the next step. Not every content team has perfect attribution, but you can still track meaningful conversion signals. Look at direct conversions from content pages when possible.

Then look at assisted influence, meaning how often content appears in the journey before a user converts. If you have a CRM, you can add another layer by tagging leads that consumed content before becoming qualified.

The key is not to chase perfect attribution. It’s to build a consistent measurement approach that shows whether content influenced business outcomes more than it costs to produce.

Efficiency metrics that show real workflow improvement

Most teams first feel ROI as a speed boost. But you want to measure speed across the whole workflow, not only the generation moment. If you only track “time to draft”, you might miss that approval rounds increased, or that editing now takes longer.

The most meaningful efficiency metric is the full cycle time from brief to publish. It captures everything: research, drafting, editing, formatting, SEO touches, and internal review. If that number improves, your process is truly faster. If it stays flat, automation might only be shifting work from one stage to another.
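As a rough illustration, brief-to-publish cycle time can be computed from two dates per piece. This sketch assumes you record a brief date and a publish date for each asset; the dates are made up, and the median is used here only because it is less sensitive to one unusually slow piece.

```python
from datetime import date
from statistics import median

def cycle_days(brief_date, publish_date):
    """Full cycle time in days, from brief to publish."""
    return (publish_date - brief_date).days

# Hypothetical pieces: (brief date, publish date).
pieces = [
    (date(2025, 3, 3), date(2025, 3, 12)),
    (date(2025, 3, 5), date(2025, 3, 11)),
    (date(2025, 3, 10), date(2025, 3, 24)),
]

durations = [cycle_days(brief, published) for brief, published in pieces]
median_cycle = median(durations)
print(durations, median_cycle)  # [9, 6, 14] 9
```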

Another important angle is whether speed becomes reliable. Writing automation often reduces variability. Instead of some drafts taking three hours and others taking eight, the team gets more predictable output. Predictability is underrated because it helps with planning and reduces deadline stress. You can measure that by tracking how often content is published on time compared to the plan.
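One simple way to express predictability is an on-time rate: the share of pieces published on or before their planned date. The sketch below assumes your content calendar stores both a planned and an actual publish date; the sample schedule is hypothetical.

```python
from datetime import date

def on_time_rate(schedule):
    """Share of pieces published on or before the planned date."""
    if not schedule:
        raise ValueError("schedule is empty")
    on_time = sum(1 for planned, actual in schedule if actual <= planned)
    return on_time / len(schedule)

# Hypothetical calendar entries: (planned date, actual publish date).
schedule = [
    (date(2025, 4, 1), date(2025, 4, 1)),    # on time
    (date(2025, 4, 3), date(2025, 4, 5)),    # late
    (date(2025, 4, 8), date(2025, 4, 7)),    # early
    (date(2025, 4, 10), date(2025, 4, 10)),  # on time
]

rate = on_time_rate(schedule)
print(f"{rate:.0%}")  # 75%
```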

Also, watch what I call “waiting time”. In many content teams, the slowest part is not writing, it’s waiting for approvals or feedback. Automation won’t fix that by itself, but if it creates cleaner first drafts, it can reduce revision loops and shorten review cycles. If review time drops, that is one of the strongest signs of real ROI.

Quality and risk metrics that protect your ROI

If speed and cost are the obvious ROI levers, quality is the silent one. You can publish more and spend less, but if quality drops, results will follow. Sometimes slowly, sometimes painfully fast.

Quality can be measured in a practical way. You do not need a philosophical debate about “good writing”. You need signals that show whether the content is publishable, accurate, and on brand.

One of the simplest quality metrics is the major edit rate. How many drafts require heavy rewriting before they can be published? If that rate is high, it means the tool is creating more work than it saves. If that rate decreases over time, it usually means your briefs, prompts, and guidelines are improving, and ROI is compounding.
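A minimal sketch of the major edit rate, assuming editors flag each draft that needed heavy rewriting. The `major_edit` flag and the sample data are hypothetical, and what counts as "heavy" is something your team has to define for itself.

```python
def major_edit_rate(drafts):
    """Share of drafts that an editor flagged as needing heavy rewriting."""
    if not drafts:
        raise ValueError("no drafts to evaluate")
    flagged = sum(1 for draft in drafts if draft["major_edit"])
    return flagged / len(drafts)

# Hypothetical month of drafts with an editor-set flag.
drafts = [
    {"title": "Draft A", "major_edit": True},
    {"title": "Draft B", "major_edit": False},
    {"title": "Draft C", "major_edit": False},
    {"title": "Draft D", "major_edit": True},
    {"title": "Draft E", "major_edit": False},
]

edit_rate = major_edit_rate(drafts)
print(f"{edit_rate:.0%}")  # 40%
```

Tracking this number month over month matters more than any single reading, since the trend is what shows whether briefs and guidelines are improving.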

Another useful metric is factual correction count. How many factual fixes were needed per piece? This is especially important for anything that includes statistics, claims, or references. If factual corrections are frequent, your team needs a tighter process, like requiring citations for key claims or restricting which topics can be auto-drafted.

Brand compliance is also part of ROI, even if it does not look like money at first. If content repeatedly misses your tone, your terminology rules, or your style requirements, the team will spend extra time correcting it. A lightweight checklist score helps. Not a complicated rubric, just a consistent way to judge whether the draft matches your voice and format.

Finally, track what escapes into the wild. Errors found after publishing are costly because they damage trust and create emergency fixes. If automation increases post-publish corrections, that is a clear ROI warning signal.

Cost metrics that translate speed into money

Time saved matters, but leadership usually wants the financial version. What did it do to costs? Cost metrics are powerful because they can show ROI even before SEO wins arrive.

The easiest metric to work with is cost per published piece. Not cost per draft. Published. This matters because automation can inflate draft volume, and draft volume is not the same as value. If you generate 50 drafts and publish 20, your ROI is tied to those 20.

To calculate the cost per published piece, you combine labor time and tool cost. You look at how many hours writers and editors spend, multiply by their internal rates, and add the tool cost allocated per piece. Tool allocation can be as simple as dividing monthly tool spend by the number of assets produced with it. It does not need to be perfect. It needs to be consistent.
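The calculation described above can be sketched as follows. All figures are hypothetical, and this version makes one simplifying assumption: monthly tool spend is spread over published pieces only, so unpublished drafts carry no allocation of their own.

```python
def cost_per_published_piece(writer_hours, editor_hours,
                             writer_rate, editor_rate,
                             monthly_tool_cost, published_count):
    """Labor cost plus tool cost, divided by published pieces (not drafts)."""
    if published_count <= 0:
        raise ValueError("published_count must be positive")
    labor_cost = writer_hours * writer_rate + editor_hours * editor_rate
    return (labor_cost + monthly_tool_cost) / published_count

# Hypothetical month: 20 published pieces, total hours across the team.
cost = cost_per_published_piece(
    writer_hours=40, editor_hours=30,
    writer_rate=50.0, editor_rate=60.0,
    monthly_tool_cost=500.0, published_count=20,
)
print(f"{cost:.2f}")  # 215.00
```

Whichever allocation rule you choose, apply the same one to the months before and after automation so the comparison stays consistent.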

A second metric that often reveals the truth is editor time per 1,000 words. Editing is where ROI can silently collapse. Some teams save hours on drafting and then lose even more hours rewriting and cleaning. If editor time rises sharply after automation, it does not mean automation failed. It means the inputs are messy, or the content type is not well-suited to automation, or the tool needs better guidelines. In other words, the process needs tuning.
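Editor time per 1,000 words is a simple normalization. The sketch below assumes you log editing minutes and final word count per piece; the before and after numbers are invented for illustration.

```python
def editor_minutes_per_1000_words(editor_minutes, word_count):
    """Normalize editing time so pieces of different lengths are comparable."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    return editor_minutes * 1000 / word_count

# Hypothetical pieces edited before and after adopting automation.
before = editor_minutes_per_1000_words(editor_minutes=90, word_count=1500)
after = editor_minutes_per_1000_words(editor_minutes=120, word_count=1600)
print(before, after)  # 60.0 75.0
```

In this made-up case the figure rose from 60 to 75 minutes, which is the kind of signal that should prompt a look at briefs, content types, or guidelines.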

Outsourcing spend is another clean cost signal. If you use freelancers heavily for drafting, outlines, or translations, automation may reduce those expenses. But you still want to watch whether you replaced that spending with internal review burden. The best ROI scenario is when outsourcing drops and internal workload stays stable or becomes more focused.

Conclusion

Writing Automation as a Service tools can produce real ROI, but only if you measure it across the full system. Speed metrics show whether your workflow truly got faster. Cost metrics show whether savings are real or just moved into editing. Quality and risk metrics protect you from silent performance decline. And SEO plus conversion metrics connect content production to actual business outcomes.

If you track these areas consistently, your ROI story becomes solid. Not “we generated more content”. But “we reduced cycle time, lowered cost per published piece, maintained quality, and improved outcomes”. That’s the kind of ROI that survives scrutiny, and that’s when writing automation becomes a dependable engine rather than a shiny experiment.
